Using CNN for Speech Emotion Recognition – What’s wrong with it?

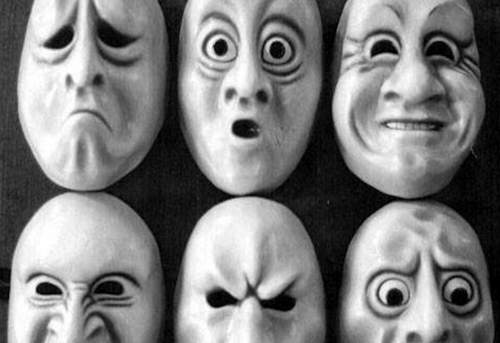

A great deal of effort has been made in facial emotion recognition in the past years. This is understandable, since someone’s face is what we are presented with in most communicative situations, and it is what we focus on to determine what a person is “up to”. Humans are experts at detecting an individual’s most subtle facial expressions and how they change over time. Even in the absence of sound, as in the silent movies of the early 1900s, actors were able to convey emotions simply by altering their facial expressions and posture. Also note that facial expressions are largely universal (apart from some minor cultural differences, often due to social conventions such as restraint).

Bodily changes are not the only way to outwardly express feelings. Speech is another, often used in combination with gestures or facial expressions. There are a multitude of potential applications of speech emotion recognition, from making speech assistants more “human” to police applications that assess the truthfulness of a suspect.

In this post, we investigate how convolutional neural networks can be used to classify emotions in speech. To do so, we use the RAVDESS sound database (“The Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS)” by Livingstone & Russo is licensed under CC BY-NC-SA 4.0), which consists of speech files recorded from 24 actors expressing 8 different emotions in two sentences, “Kids are talking by the door” and “Dogs are sitting by the door”.

Linguistic and paralinguistic features

Bodily expressions (posture) and facial expressions are fairly universal. Yes, there can be behavioral conventions typical of some parts of the world, but apart from that, it is reasonable to say that everyone will recognize an angry individual regardless of where he or she comes from. This is, however, less evident when it comes to speech. We must distinguish between two different parts of any spoken language: the language itself, with its grammar, vocabulary and context, and what lies above the language, namely how it is expressed. The first part is referred to as “linguistic” and the latter as “paralinguistic”. Even when we have no clue of what is being said because we lack knowledge of the linguistics, we may have an idea of the emotions being expressed, even though paralinguistic features may differ between languages. People from other countries may perceive some languages as harsher than others, but for native speakers, their language has very specific paralinguistic features that allow nearly everyone (with rare exceptions) to understand the emotions conveyed in speech.

We, the authors, do not claim to be experts in linguistics or paralinguistics, and our combined knowledge of foreign languages is limited to six languages. Our aim is to investigate whether it is possible to train a convolutional neural network to recognize emotions well enough to use it in a range of applications.

Method

The dataset we use consists of two sentences, “Kids are talking by the door” and “Dogs are sitting by the door”, each spoken twice with 8 emotions (neutral, calm, happy, sad, angry, fearful, disgust and surprised) at two levels of intensity (except for the neutral emotion, which has only one). 24 actors (12 women and 12 men) performed these sentences with each emotion, giving a total of 1440 sound files. We chose to convert all files into spectrograms. We remind the reader that a sound spectrogram (or sonogram) is a visual representation of how the frequency content of a signal evolves over time. The sound is cut into short, overlapping frames and a Fast Fourier Transform (FFT) is applied to each frame, which separates the frequencies and amplitudes of its components. The result is displayed as an image with time on the horizontal axis, frequency on the vertical axis, and amplitude encoded as color or brightness.
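The frame-by-frame FFT described above (a short-time Fourier transform) can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors’ pipeline: the 25 ms frame length, 10 ms hop, Hann window and the synthetic 440 Hz test tone are all assumptions chosen for the example.

```python
import numpy as np

def spectrogram(signal, sample_rate, frame_ms=25.0, hop_ms=10.0):
    """Magnitude spectrogram computed with a short-time Fourier transform.

    Returns (magnitudes, freqs): magnitudes has shape (n_freq_bins, n_frames),
    so frequency runs along the vertical axis and time along the horizontal one.
    Frame length, hop and window are illustrative assumptions.
    """
    frame_len = int(sample_rate * frame_ms / 1000)
    hop = int(sample_rate * hop_ms / 1000)
    window = np.hanning(frame_len)  # taper each frame to reduce spectral leakage
    n_frames = 1 + (len(signal) - frame_len) // hop
    frames = np.stack([signal[k * hop : k * hop + frame_len] * window
                       for k in range(n_frames)])
    # rfft keeps only the non-negative frequencies of a real-valued signal
    magnitudes = np.abs(np.fft.rfft(frames, axis=1))
    freqs = np.fft.rfftfreq(frame_len, d=1.0 / sample_rate)
    return magnitudes.T, freqs

# Usage: one second of a synthetic 440 Hz tone sampled at 16 kHz
sr = 16000
t = np.arange(sr) / sr
tone = np.sin(2 * np.pi * 440 * t)
spec, freqs = spectrogram(tone, sr)
print(spec.shape)  # (201, 98)
print(freqs[spec[:, 10].argmax()])  # the strongest bin sits at the tone's frequency
```

For display, the magnitudes are usually converted to decibels and mapped to a color scale, which yields images like the ones in Fig 1.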

Fig 1: Examples of RGB (colored) sound spectrograms for the same sentence (Kids are talking by the door) spoken in a neutral tone. The frequency scales are linear, logarithmic and Mel.
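The Mel scale in Fig 1 compresses high frequencies, roughly mimicking human pitch perception. A minimal sketch of one common Hz-to-Mel conversion (the formula m = 2595 log10(1 + f/700)) follows; other variants exist, and we do not know which one was used to produce the figure.

```python
import math

def hz_to_mel(f_hz):
    """Convert a frequency in Hz to mels using m = 2595 * log10(1 + f / 700)."""
    return 2595.0 * math.log10(1.0 + f_hz / 700.0)

def mel_to_hz(mel):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (mel / 2595.0) - 1.0)

# Equal steps in Hz shrink on the Mel scale as frequency grows
for f in (100, 1000, 4000, 8000):
    print(f, "Hz ->", round(hz_to_mel(f)), "mel")
```

With this definition, 1000 Hz maps to about 1000 mel, and a 1000 Hz step between 7 and 8 kHz covers far fewer mels than the step from 0 to 1 kHz.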

As in most domains, large datasets are a prerequisite for good results and well-trained models. The problem is that we only have 1 440 spectrograms. To generate sufficiently many examples, we divided each utterance into 1-second blocks using a sliding window that advances by 10 milliseconds at a time. All in all, this generates 75 000 spectrograms. We then divided our dataset into four parts (seen or unseen actors, combined with the training or the held-out sentence). Given that we had 24 actors, we set aside 6 of them (3 males and 3 females) to test our model on voices it had not been trained on. We also trained the model only on the sentence “Kids are talking by the door” and kept the sentence “Dogs are sitting by the door” for testing. This makes sense, since we want to show that the emotion recognition is independent of what is said and of who says it.
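The sliding-window augmentation can be sketched as follows. We assume, for illustration only, that spectrogram frames are spaced 10 ms apart, so a 1-second block is 100 frames and a one-frame step moves the window by 10 ms; the 128 frequency bins and the 3.5-second utterance are hypothetical values, and `sliding_blocks` is a name we introduce here.

```python
import numpy as np

def sliding_blocks(spec, frames_per_block=100, step_frames=1):
    """Cut a (freq, time) spectrogram into fixed-length, overlapping blocks.

    Assumes 10 ms between frames: 100 frames = 1 second, 1 frame = 10 ms step.
    Returns an array of shape (n_blocks, n_freq_bins, frames_per_block).
    """
    n_bins, n_frames = spec.shape
    if n_frames < frames_per_block:  # utterance shorter than one block
        return np.empty((0, n_bins, frames_per_block), dtype=spec.dtype)
    starts = range(0, n_frames - frames_per_block + 1, step_frames)
    return np.stack([spec[:, s : s + frames_per_block] for s in starts])

# A hypothetical 3.5-second utterance: 350 frames of 128 frequency bins
rng = np.random.default_rng(0)
spec = rng.random((128, 350))
blocks = sliding_blocks(spec)
print(blocks.shape)  # (251, 128, 100)
```

Note that neighbouring blocks share 99 of their 100 frames, so the number of blocks grows much faster than the amount of genuinely new information.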

Dealing with images (our spectrograms) led us to choose one of the many Convolutional Neural Network approaches, and our final choice fell on AlexNet. There are several reasons for this choice. For one, using ReLU instead of tanh as the activation function speeds up training, because ReLU does not saturate for positive inputs. Also, dropout helps reduce overfitting. A slight drawback is that dropout slows down convergence, but as pointed out above, this is compensated by the use of ReLU. Finally, AlexNet uses overlapping pooling (the pooling stride is smaller than the pooling window), which reduces the size of the feature maps and, according to the original AlexNet paper, slightly lowers the error rate.
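Two of these properties can be illustrated with NumPy: the gradient of ReLU stays at 1 for positive inputs while the gradient of tanh vanishes for large inputs, and a 3×3 pooling window with stride 2 (the overlapping configuration described in the AlexNet paper) makes neighbouring windows share a row or column. This is a toy sketch on a 5×5 array, not the implementation used by AlexNet or the Peltarion platform.

```python
import numpy as np

def max_pool2d(x, kernel=3, stride=2):
    """Max pooling over a 2-D array; windows overlap when stride < kernel."""
    out_h = (x.shape[0] - kernel) // stride + 1
    out_w = (x.shape[1] - kernel) // stride + 1
    out = np.empty((out_h, out_w), dtype=x.dtype)
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = x[i * stride : i * stride + kernel,
                          j * stride : j * stride + kernel].max()
    return out

# Activation gradients: ReLU does not saturate for positive inputs, tanh does
z = np.array([-2.0, 0.5, 5.0])
relu_grad = (z > 0).astype(float)   # [0, 1, 1]
tanh_grad = 1.0 - np.tanh(z) ** 2   # close to 0 at z = 5

x = np.arange(25, dtype=float).reshape(5, 5)
print(max_pool2d(x))        # overlapping 3x3 windows, stride 2 -> [[12, 14], [22, 24]]
print(max_pool2d(x, 2, 2))  # non-overlapping 2x2 windows, stride 2 -> [[6, 8], [16, 18]]
```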

The architecture of AlexNet is the following:

Fig 2: The AlexNet architecture.

Fig 3: The AlexNet model in the Peltarion platform.
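The spatial sizes in the AlexNet architecture follow from the formula (size − kernel + 2·padding) / stride + 1. The sketch below traces the convolutional part assuming a 227×227×3 input and the layer settings of the original AlexNet; local response normalization and the two-GPU split are omitted, and the configuration in the Peltarion model may differ.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution or pooling layer."""
    return (size - kernel + 2 * padding) // stride + 1

# (name, kernel, stride, padding, output channels); None = pooling keeps channels
LAYERS = [
    ("conv1", 11, 4, 0, 96),
    ("pool1", 3, 2, 0, None),
    ("conv2", 5, 1, 2, 256),
    ("pool2", 3, 2, 0, None),
    ("conv3", 3, 1, 1, 384),
    ("conv4", 3, 1, 1, 384),
    ("conv5", 3, 1, 1, 256),
    ("pool5", 3, 2, 0, None),
]

def trace_shapes(size=227, channels=3):
    """Return (name, height, width, channels) after each layer."""
    shapes = []
    for name, k, s, p, c in LAYERS:
        size = conv_out(size, k, s, p)
        channels = c if c is not None else channels
        shapes.append((name, size, size, channels))
    return shapes

shapes = trace_shapes()
for name, h, w, c in shapes:
    print(f"{name}: {h}x{w}x{c}")
_, h, w, c = shapes[-1]
# Flattened features feed FC 4096 -> FC 4096 -> FC n_classes (8 emotions here)
print("flattened:", h * w * c)  # 9216
```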

Results

We ran the model trained on 18 actors speaking the sentence “Kids are talking by the door” and observed that it converges quite quickly (within 15 epochs) and reaches an accuracy of 99.6 %, which, given the amount of data, should be considered exceptional.

As the confusion matrix in Fig 4 shows, the result is impressive. However, the evaluation is done on a very particular dataset, namely one containing the same actors and the same spoken sentence as the training dataset.

Fig 4: Confusion matrix for the validation dataset. The values are percentages. As one can see, as long as the same sentence is spoken by the same actors as in the training set, the accuracy of the model is fairly high.
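A confusion matrix expressed in percentages can be computed as sketched below. We assume rows are normalized per true class (the caption only says the values are percentages), and the labels are toy data, not the article’s results.

```python
EMOTIONS = ["neutral", "calm", "happy", "sad", "angry", "fearful", "disgust", "surprised"]

def confusion_matrix_percent(y_true, y_pred, n_classes):
    """Row-normalized confusion matrix: entry [i][j] is the percentage of
    class-i samples predicted as class j, so each non-empty row sums to 100."""
    counts = [[0] * n_classes for _ in range(n_classes)]
    for t, p in zip(y_true, y_pred):
        counts[t][p] += 1
    percents = []
    for row in counts:
        total = sum(row)
        percents.append([100.0 * c / total if total else 0.0 for c in row])
    return percents

def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)

# Toy labels (indices into EMOTIONS), purely illustrative
y_true = [0, 0, 1, 1, 4, 4, 4, 4]
y_pred = [0, 1, 1, 1, 4, 4, 4, 5]
cm = confusion_matrix_percent(y_true, y_pred, len(EMOTIONS))
print(cm[4][4], accuracy(y_true, y_pred))  # 75.0 0.75
```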

To check how well the model generalizes, we extracted 6 of the actors (3 males and 3 females) from the training and validation datasets, i.e. the model is never trained on these six actors. This gave us four combinations to test:

- Same actors, same sentence (the result is given in Fig 4)

- Same actors, other sentence (“dogs are sitting by the door”).

- Different actors, same sentence

- Different actors, other sentence (“dogs are sitting by the door”).
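The four test conditions can be derived directly from the RAVDESS file names, which encode seven hyphen-separated fields (modality, vocal channel, emotion, intensity, statement, repetition, actor; odd actor IDs are male, even IDs female). The sketch below follows that published convention as we understand it; the post does not say which six actors were held out, so `HELD_OUT` is a hypothetical choice.

```python
# RAVDESS file name fields, e.g. "03-01-05-02-02-01-21.wav":
# modality-vocal_channel-emotion-intensity-statement-repetition-actor
EMOTIONS = {1: "neutral", 2: "calm", 3: "happy", 4: "sad",
            5: "angry", 6: "fearful", 7: "disgust", 8: "surprised"}
KIDS, DOGS = 1, 2  # statement codes: "Kids are talking...", "Dogs are sitting..."

# Hypothetical held-out actors: 19, 21, 23 (male) and 20, 22, 24 (female)
HELD_OUT = {19, 20, 21, 22, 23, 24}

def parse_name(filename):
    """Split a RAVDESS file name into its labelled fields."""
    fields = [int(x) for x in filename.rsplit(".", 1)[0].split("-")]
    _, _, emotion, intensity, statement, repetition, actor = fields
    return {"emotion": EMOTIONS[emotion], "intensity": intensity,
            "statement": statement, "repetition": repetition, "actor": actor}

def split_condition(meta):
    """Assign a file to the training pool or to one of the test conditions."""
    seen_actor = meta["actor"] not in HELD_OUT
    same_sentence = meta["statement"] == KIDS
    if seen_actor and same_sentence:
        return "train/validation (same actors, same sentence)"
    if seen_actor:
        return "same actors, other sentence"
    if same_sentence:
        return "different actors, same sentence"
    return "different actors, other sentence"

m = parse_name("03-01-05-02-02-01-21.wav")
print(m["emotion"], "|", split_condition(m))  # angry | different actors, other sentence
```

Splitting by file (and hence by actor and sentence) before cutting the 1-second blocks keeps blocks from the same recording out of different conditions.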

As we saw above, as long as the same sentence was spoken by the same actors as in the training set, everything seemed fine. Things take another turn as soon as either of these is changed, and the worst part is that these changes are minor, yet the model must handle them for the solution to have any practical use.

What happens when we present the model with the same actors speaking the second sentence, “Dogs are sitting by the door”? Already here, the result is less than satisfying:

At this point, we already see that an application with this accuracy would be unreliable (even though it is better than chance). As a last example, we test the model on actors it has never been presented with, speaking the sentence about dogs:

As one can see, things get even worse in that case. What we can infer from these results is that the method we chose, which is also the one chosen in much of the available literature, does not generalize well to new speakers or new sentences. CNNs are chosen for this kind of task because spectrograms are images and these models were developed for image recognition. There are, however, huge differences between images of objects and a visualization of a sound.

The problems and the possible fixes

As we have seen above, the classification results on unseen material are not impressive (even though they are better than chance). There are several possible reasons, which together make the method we have chosen suboptimal. In this section, we discuss these issues.
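To make “better than chance” concrete, the sketch below computes two chance baselines from the RAVDESS file counts per actor: neutral appears in 4 files (one intensity) and each of the other seven emotions in 8 files (two intensities). These are file-level figures; after cutting utterances into 1-second blocks, the class proportions also depend on utterance length, so the block-level baselines may differ slightly.

```python
from fractions import Fraction

# Files per actor: 2 statements x 2 repetitions x number of intensities
files_per_emotion = {"neutral": 4}
files_per_emotion.update({e: 8 for e in
    ["calm", "happy", "sad", "angry", "fearful", "disgust", "surprised"]})

total = sum(files_per_emotion.values())              # files per actor
uniform_guess = Fraction(1, len(files_per_emotion))  # guess a class uniformly at random
# Always predicting one of the most frequent (non-neutral) classes
majority_class = Fraction(max(files_per_emotion.values()), total)

print(total, float(uniform_guess), round(float(majority_class), 4))  # 60 0.125 0.1333
```

Any useful classifier should therefore clearly exceed roughly 12.5 to 13.3 % accuracy on these 8 classes.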

Quantity and variation of data

As mentioned in the introduction, we chose to use The Ryerson Audio-Visual Database of Emotional Speech and Song (RAVDESS) dataset. It contains two sentences recorded by 24 actors. To augment the data, we extracted 1-second sections of the recorded sentences by sliding a window across the spectrogram. It might seem like we managed to produce a large amount of data, but in the end, we don’t have that much information to go by: with a 10 ms step, two consecutive 1-second blocks share 99 % of their content. Also, it can be argued that the variation in the data is not large enough. The range of emotions being enacted is large, but what is being said isn’t.

Sound is an event in time, not in space

We transformed the dataset of sounds into spectrograms (images) and applied a CNN (AlexNet) to them, thus treating the sounds as if they were images (scenes). However, in images, objects are single distinct entities (a box is not the sum of, say, a lamp and a chair), while a sound can be the sum of a number of different sounds. This makes it very hard to tell which frequencies belong to distinct events, or which event a given frequency originates from. That’s one of the problems.
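The additive nature of sound can be illustrated with two synthetic tones. Because the Fourier transform is linear, the spectrum of the recorded mixture is exactly the sum of the spectra of its sources, and nothing in the result labels which peak came from which source. The 200 Hz “voice-like” tone and 50 Hz hum are hypothetical signals chosen for the example.

```python
import numpy as np

sr = 8000                                        # sample rate in Hz (illustrative)
t = np.arange(sr) / sr                           # one second of samples
voice_like = 0.8 * np.sin(2 * np.pi * 200 * t)   # hypothetical 200 Hz source
hum = 0.5 * np.sin(2 * np.pi * 50 * t)           # hypothetical 50 Hz mains hum
mixture = voice_like + hum                       # a microphone records only the sum

spec_mix = np.fft.rfft(mixture)
spec_sum = np.fft.rfft(voice_like) + np.fft.rfft(hum)
freqs = np.fft.rfftfreq(len(t), d=1 / sr)

# Linearity: the mixture's spectrum equals the sum of the sources' spectra
print(np.allclose(spec_mix, spec_sum))  # True

# Both peaks appear in the mixture, with no indication of their origin
peaks = sorted(freqs[np.argsort(np.abs(spec_mix))[-2:]])
print(peaks)
```

When two sources share a frequency bin, their magnitudes do not even add simply, because their phases interfere, which makes separating them from a magnitude spectrogram harder still.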

Another obvious problem concerns the dimensions that the images represent. The image of an object is the projection of a three-dimensional object onto a two-dimensional plane. The fact that the object also exists in time is not taken into account; in fact, the object is the same regardless of time. A spectrogram, however, depicts a given property of sound (frequency) over time, which is very different from an image of an object in space. Thus, sliding the filters of a convolutional layer along the time axis is somewhat problematic, because the filters lack the context in which the sound (or combination of sounds) is produced. That context is how things were said (or what was said) before the moment being analyzed.

This points to a potential way of improving the result: use a neural network that takes past events into account. In other words, use a Recurrent Neural Network, such as a Long Short-Term Memory (LSTM) network. This is the topic of an upcoming post.
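As a rough illustration of how an LSTM carries context over time, the sketch below runs the standard LSTM equations over a sequence of spectrogram frames in NumPy. It is untrained, uses random weights and illustrative sizes, and is not the model of the upcoming post; the forget-gate bias of 1 is a common initialization heuristic, not something taken from this article.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_last_state(frames, W, U, b):
    """Run a single-layer LSTM over frames and return the final hidden state.

    frames: (n_frames, n_features); W: (4H, n_features); U: (4H, H); b: (4H,)
    Stacked gate order: input, forget, output, candidate.
    """
    H = U.shape[1]
    h = np.zeros(H)
    c = np.zeros(H)
    for x in frames:                     # one time step per spectrogram frame
        z = W @ x + U @ h + b
        i = sigmoid(z[0:H])              # input gate
        f = sigmoid(z[H:2 * H])          # forget gate: how much past memory to keep
        o = sigmoid(z[2 * H:3 * H])      # output gate
        g = np.tanh(z[3 * H:4 * H])      # candidate memory content
        c = f * c + i * g                # cell state carries context across time
        h = o * np.tanh(c)
    return h

rng = np.random.default_rng(0)
n_features, H = 128, 32                  # illustrative sizes
W = rng.normal(scale=0.1, size=(4 * H, n_features))
U = rng.normal(scale=0.1, size=(4 * H, H))
b = np.zeros(4 * H)
b[H:2 * H] = 1.0                         # forget-gate bias of 1 (common heuristic)

frames = rng.normal(size=(10, n_features))  # e.g. 100 ms of 10 ms frames
h_final = lstm_last_state(frames, W, U, b)

# Altering only the first frame changes the final state: earlier input is remembered
altered = frames.copy()
altered[0] += 5.0
print(np.abs(h_final - lstm_last_state(altered, W, U, b)).max())
```

Unlike a convolutional filter, whose view is limited to a fixed local patch, the cell state here can in principle accumulate information from every earlier frame.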

Writers are Serge de Gosson de Varennes, Senior Data Scientist at Sopra Steria Sweden, and Micael Starck, former Data Analyst at Sopra Steria.